Few Shot Domain Adaptation Tongue Color Classification in Traditional Chinese Medicine via Two-stage Meta-learning

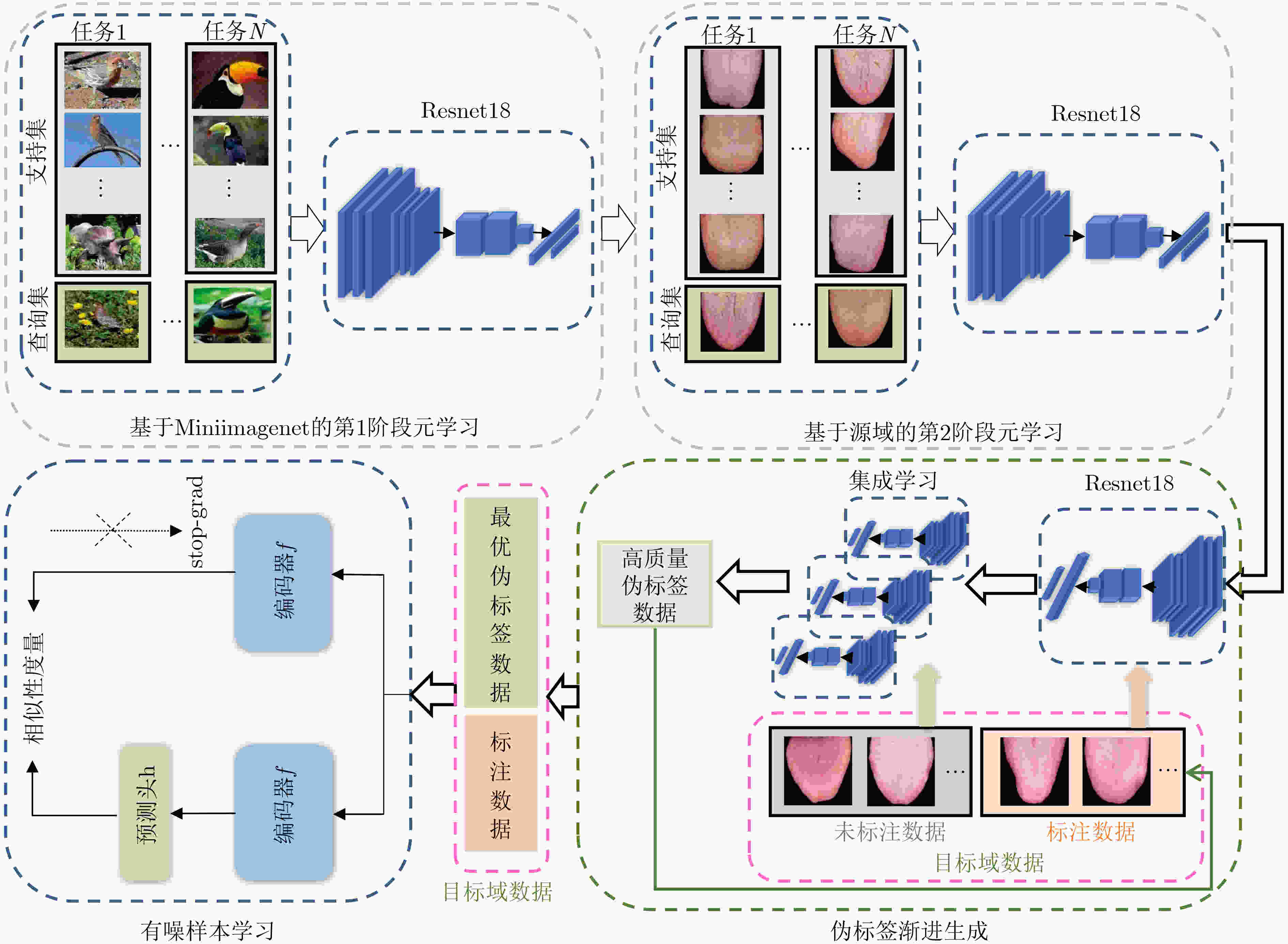

Abstract: Tongue color is one of the most important diagnostic features in Traditional Chinese Medicine (TCM) tongue diagnosis. In practice, a tongue color classification model trained on tongue images acquired by one device often performs much worse when applied to another device, because the data distributions of the two devices differ. To address this problem, this paper proposes a few-shot domain adaptation method for tongue color classification based on two-stage meta-learning. First, a two-stage meta-learning training strategy is designed to extract domain-invariant features from labeled source-domain samples. The meta-trained network is then fine-tuned with a few labeled target-domain samples, so that the model quickly adapts to the characteristics of new target-domain samples; this improves the generalization of the tongue color classification model and avoids overfitting. Next, a progressive high-quality pseudo-label generation strategy is proposed: the meta-trained model predicts the unlabeled target-domain samples, and only predictions with high confidence are kept as pseudo-labels, so that high-quality pseudo-labels are generated progressively. Finally, these high-quality pseudo-labels, together with the labeled target-domain data, are used to train the final tongue color classification model.
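The confidence-based selection behind the pseudo-label step can be illustrated with a minimal sketch. It assumes each model outputs a probability vector over the four tongue-color classes of Table 1, averages the outputs of several models (the "model ensemble" variant compared in Table 6), and keeps only predictions whose top probability reaches a threshold. The function names, the 0.8 threshold, and the toy probabilities are hypothetical, not values from the paper. In a progressive scheme this selection would be repeated after each retraining round, with the samples left unselected revisited later.

```python
from typing import List, Sequence, Tuple

# Hypothetical class names taken from the four tongue-color categories of Table 1.
CLASSES = ["light red", "red", "dark red", "dark purple"]


def ensemble_average(model_probs: Sequence[Sequence[Sequence[float]]]) -> List[List[float]]:
    """Average class probabilities over models.

    model_probs[m][i][k] is model m's probability that unlabeled sample i has class k.
    """
    n_models = len(model_probs)
    averaged = []
    for per_sample in zip(*model_probs):  # one probability vector per model for sample i
        averaged.append([sum(col) / n_models for col in zip(*per_sample)])
    return averaged


def select_confident(probs: Sequence[Sequence[float]],
                     threshold: float) -> List[Tuple[int, int]]:
    """Return (sample index, predicted class) pairs whose top probability >= threshold."""
    selected = []
    for i, p in enumerate(probs):
        best = max(range(len(p)), key=lambda k: p[k])  # argmax class
        if p[best] >= threshold:
            selected.append((i, best))
    return selected


# Toy softmax outputs of two hypothetical meta-trained models on three unlabeled images.
model_a = [[0.90, 0.05, 0.03, 0.02], [0.40, 0.35, 0.20, 0.05], [0.10, 0.05, 0.80, 0.05]]
model_b = [[0.80, 0.10, 0.05, 0.05], [0.30, 0.50, 0.15, 0.05], [0.05, 0.05, 0.90, 0.00]]

avg = ensemble_average([model_a, model_b])
for idx, cls in select_confident(avg, threshold=0.8):  # 0.8 is an illustrative threshold
    print(idx, CLASSES[cls])
```

Here samples 0 and 2 receive pseudo-labels ("light red" and "dark red"), while sample 1, whose highest averaged probability is only 0.425, stays unlabeled. Averaging before thresholding means a pseudo-label is accepted only when the models agree confidently, which trades quantity for accuracy.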
Because the pseudo-labels still contain noise, a contrastive regularization function is adopted to suppress the negative impact of noisy samples during training and to improve tongue color classification accuracy in the target domain. Experimental results on two self-built TCM tongue color classification datasets show that, with only 20 labeled target-domain samples, the tongue color classification accuracy in the target domain reaches 91.3%, only 2.05 percentage points lower than that of a supervised classification model trained in the target domain.
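As a quick check of the reported gap, assume (the numbers suggest it, though the abstract does not name the dataset) that the target domain is SIPL-B and that the supervised reference is its overall accuracy in Table 2:

```latex
\Delta = \mathrm{Acc}_{\text{sup}}(\text{SIPL-B}) - \mathrm{Acc}_{\text{proposed}} = 93.35\,\% - 91.30\,\% = 2.05 \text{ percentage points}
```

The same subtraction with SIPL-A's overall accuracy of 94.03% would give 2.73 points, which does not match the reported figure.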

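Table 1 Tongue color categories and sample counts of the two self-built datasets

| Dataset | Light red | Red | Dark red | Dark purple | Total |
|---|---|---|---|---|---|
| SIPL-A | 130 | 130 | 114 | 36 | 410 |
| SIPL-B | 200 | 190 | 110 | 35 | 535 |

Table 2 Classification accuracy (%) on the two datasets obtained with supervised learning

| Dataset | Light red tongue | Red tongue | Dark red tongue | Dark purple tongue | Overall accuracy |
|---|---|---|---|---|---|
| SIPL-A | 91.25 | 92.48 | 96.80 | 100.00 | 94.03 |
| SIPL-B | 93.90 | 93.19 | 95.65 | 85.71 | 93.35 |

Table 3 Effect of different training strategies on pseudo-label accuracy (%)

| Training strategy | Pseudo-label accuracy |
|---|---|
| Strategy 1 | 66.5 |
| Strategy 2 | 76.8 |
| Strategy 3 | 74.8 |
| Strategy 4 | 79.9 |
| Strategy 5 | 82.0 |

Table 4 Effect of the number of labeled target-domain samples on pseudo-label accuracy (%)

| Labeled target-domain samples | Training strategy | Pseudo-label accuracy |
|---|---|---|
| 1 | Two-stage meta-learning | 78.6 |
| 3 | Two-stage meta-learning | 80.7 |
| 5 | Two-stage meta-learning | 82.0 |

Table 5 Effect of different meta-learning methods on pseudo-label accuracy (%)

| Meta-learning method | Pseudo-label accuracy, single-stage meta-learning | Pseudo-label accuracy, two-stage meta-learning |
|---|---|---|
| Matching networks | 77.2 | 78.5 |
| Relation network | 77.8 | 79.8 |
| SNAML | 78.5 | 81.2 |
| Online MAML | 79.5 | 81.7 |
| Proposed method | 79.9 | 82.0 |

Table 6 Comparison of different pseudo-label generation methods

| Method | Number of pseudo-labels | Pseudo-label accuracy (%) |
|---|---|---|
| Single-pass pseudo-label generation | / | 82.00 |
| Single-pass pseudo-label generation + model ensemble | / | 83.96 |
| Progressive pseudo-label generation + confidence threshold | 201 | 85.50 |
| Progressive pseudo-label generation + confidence threshold + model ensemble | 146 | 87.00 |

Table 7 Results of each round of progressive high-quality pseudo-label generation

| Round | High-quality pseudo-labels | Pseudo-label accuracy (%) |
|---|---|---|
| Round 1 | 146 | 87.00 |
| Round 2 | 140 | 88.10 |
| Round 3 | 145 | 88.19 |
| Round 4 | 148 | 88.16 |

Table 8 Classification accuracy of different methods for learning with noisy labels (%)

| Method | Accuracy using the best pseudo-labels + a few labeled samples |
|---|---|
| Direct training | 87.50 |
| GCE | 90.01 |
| PENCIL | 87.76 |
| SCE | 90.25 |
| Co-teaching | 89.86 |
| Co-teaching+ | 89.98 |
| CTR | 90.35 |
| CTR+GCE | 90.80 |
| Proposed method (CTR+SCE) | 91.30 |

Table 9 Comparison of different domain adaptation methods (%)

| Method | Classification accuracy |
|---|---|
| MME | 82.6 |
| CDAC | 83.8 |
| AdaMatch | 89.1 |
| Proposed method | 91.3 |

Table 10 Ablation study (%)

| ResNet18 | Two-stage meta-learning | Progressive high-quality pseudo-label generation | CTR+SCE | Classification accuracy |
|---|---|---|---|---|
| √ | | | | 76.8 |
| √ | √ | | | 82.3 |
| √ | √ | √ | | 87.5 |
| √ | √ | √ | √ | 91.3 |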
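Tables 8 and 10 combine contrastive regularization (CTR) [25] with symmetric cross entropy (SCE) [24]. As a minimal sketch of the SCE term only (not the authors' training code; CTR is omitted), the per-sample loss with a one-hot label y is alpha * CE + beta * RCE, where the reverse term RCE = -sum_k p_k log q_k replaces log 0 with a constant A, so that RCE = -A * (1 - p_y). The weights alpha = 0.1 and beta = 1.0 are illustrative, and A = -4 is an assumed value, not one taken from this paper.

```python
import math
from typing import Sequence

# A: constant substituted for log(0) in the reverse cross-entropy term (assumed value).
LOG_ZERO = -4.0


def symmetric_cross_entropy(probs: Sequence[float], label: int,
                            alpha: float = 0.1, beta: float = 1.0) -> float:
    """SCE for one sample with a one-hot label: alpha * CE + beta * RCE.

    probs: predicted class probabilities (e.g. softmax output); label: class index.
    alpha, beta: illustrative weights, not values taken from the paper.
    """
    eps = 1e-12  # guards log(0) in the ordinary cross-entropy term
    ce = -math.log(max(probs[label], eps))
    # Reverse CE = -sum_k p_k * log q_k with q the one-hot label:
    # log q_k is 0 for the labeled class and LOG_ZERO for every other class.
    rce = -sum(p * LOG_ZERO for k, p in enumerate(probs) if k != label)
    return alpha * ce + beta * rce


clean = symmetric_cross_entropy([0.7, 0.1, 0.1, 0.1], label=0)
noisy = symmetric_cross_entropy([0.97, 0.01, 0.01, 0.01], label=1)  # confident, disagrees with label
print(round(clean, 4), round(noisy, 4))
```

The reverse term never exceeds |A| = 4, however confidently the prediction contradicts a (possibly wrong) pseudo-label, whereas ordinary cross entropy grows without bound; this bounded term is what makes SCE less sensitive to label noise.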

[1] SUN Liangliang. Research on the classification of TCM tongue color under noisy labeling[D]. [Master dissertation], Beijing University of Technology, 2022.

[2] HOU Jun, SU Hongyi, YAN Bo, et al. Classification of tongue color based on CNN[C]. 2017 IEEE 2nd International Conference on Big Data Analysis (ICBDA), Beijing, China, 2017: 725–729.

[3] FU Shengyu, ZHENG Hong, YANG Zijiang, et al. Computerized tongue coating nature diagnosis using convolutional neural network[C]. 2017 IEEE 2nd International Conference on Big Data Analysis (ICBDA), Beijing, China, 2017: 730–734.

[4] QU Panling, ZHANG Hui, ZHUO Li, et al. Automatic analysis of tongue substance color and coating color using sparse representation-based classifier[C]. 2016 International Conference on Progress in Informatics and Computing (PIC), Shanghai, China, 2016: 289–294.

[5] LI Yanping, ZHUO Li, SUN Liangliang, et al. Tongue color classification in TCM with noisy labels via confident-learning-assisted knowledge distillation[J]. Chinese Journal of Electronics, 2023, 32(1): 140–150. doi: 10.23919/cje.2022.00.040.

[6] ZHUO Li, SUN Liangliang, ZHANG Hui, et al. TCM tongue color classification method under noisy labeling[J]. Journal of Electronics & Information Technology, 2022, 44(1): 89–98. doi: 10.11999/JEIT210935.

[7] GRETTON A, SMOLA A, HUANG Jiayuan, et al. Covariate shift by kernel mean matching[M]. QUIÑONERO-CANDELA J, SUGIYAMA M, SCHWAIGHOFER A, et al. Dataset Shift in Machine Learning. Cambridge: The MIT Press, 2008: 131–160.

[8] GOPALAN R, LI Ruonan, and CHELLAPPA R. Domain adaptation for object recognition: An unsupervised approach[C]. 2011 International Conference on Computer Vision, Barcelona, Spain, 2011: 999–1006.

[9] PAN S J, TSANG I W, KWOK J T, et al. Domain adaptation via transfer component analysis[J]. IEEE Transactions on Neural Networks, 2011, 22(2): 199–210. doi: 10.1109/TNN.2010.2091281.

[10] JHUO I H, LIU Dong, LEE D T, et al. Robust visual domain adaptation with low-rank reconstruction[C]. 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, USA, 2012: 2168–2175.

[11] GRETTON A, BORGWARDT K M, RASCH M, et al. A kernel method for the two-sample-problem[C]. The 19th International Conference on Neural Information Processing Systems, Vancouver, Canada, 2006: 513–520.

[12] SUN Baochen, FENG Jiashi, and SAENKO K. Correlation alignment for unsupervised domain adaptation[M]. CSURKA G. Domain Adaptation in Computer Vision Applications. Cham: Springer, 2017: 153–171.

[13] KULLBACK S and LEIBLER R A. On information and sufficiency[J]. The Annals of Mathematical Statistics, 1951, 22(1): 79–86. doi: 10.1214/aoms/1177729694.

[14] KANG Guoliang, JIANG Lu, YANG Yi, et al. Contrastive adaptation network for unsupervised domain adaptation[C]. 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Los Angeles, USA, 2019: 4888–4897.

[15] GLOROT X, BORDES A, and BENGIO Y. Domain adaptation for large-scale sentiment classification: A deep learning approach[C]. The 28th International Conference on International Conference on Machine Learning, Bellevue, USA, 2011: 513–520.

[16] GANIN Y and LEMPITSKY V. Unsupervised domain adaptation by backpropagation[C]. The 32nd International Conference on International Conference on Machine Learning, Lille, France, 2015: 1180–1189.

[17] ZHANG Xu, YU F X, CHANG S F, et al. Deep transfer network: Unsupervised domain adaptation[EB/OL]. https://arxiv.org/abs/1503.00591, 2015.

[18] SAITO K, KIM D, SCLAROFF S, et al. Semi-supervised domain adaptation via minimax entropy[C]. 2019 IEEE/CVF International Conference on Computer Vision, Seoul, South Korea, 2019: 8049–8057.

[19] PÉREZ-CARRASCO M, PROTOPAPAS P, and CABRERA-VIVES G. Con2DA: Simplifying semi-supervised domain adaptation by learning consistent and contrastive feature representations[C]. 35th Conference on Neural Information Processing Systems (NeurIPS 2021), Sydney, Australia, 2021: 1558–1569. doi: 10.48550/arXiv.2204.01558.

[20] LI Jichang, LI Guanbin, SHI Yemin, et al. Cross-domain adaptive clustering for semi-supervised domain adaptation[C]. 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, USA, 2021: 2505–2514.

[21] BERTHELOT D, ROELOFS R, SOHN K, et al. AdaMatch: A unified approach to semi-supervised learning and domain adaptation[C]. The Tenth International Conference on Learning Representations, 2022: 4732–4782.

[22] MISHRA N, ROHANINEJAD M, CHEN Xi, et al. A Simple neural attentive meta-learner[C]. 6th International Conference on Learning Representations, Vancouver, Canada, 2018: 3141–3158.

[23] XU Zhixiong, CHEN Xiliang, TANG Wei, et al. Meta weight learning via model-agnostic meta-learning[J]. Neurocomputing, 2021, 432: 124–132. doi: 10.1016/j.neucom.2020.08.034.

[24] WANG Yisen, MA Xingjun, CHEN Zaiyi, et al. Symmetric cross entropy for robust learning with noisy labels[C]. 2019 IEEE/CVF International Conference on Computer Vision, Seoul, South Korea, 2019: 322–330.

[25] YI Li, LIU Sheng, SHE Qi, et al. On learning contrastive representations for learning with noisy labels[C]. 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, USA, 2022: 16661–16670.

[26] VINYALS O, BLUNDELL C, LILLICRAP T, et al. Matching networks for one shot learning[C]. The 30th International Conference on Neural Information Processing Systems, Barcelona, Spain, 2016: 3637–3645. doi: 10.48550/arXiv.1606.04080.

[27] SUNG F, YANG Yongxin, ZHANG Li, et al. Learning to compare: Relation network for few-shot learning[C]. 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, USA, 2018: 1199–1208.

[28] FINN C, RAJESWARAN A, KAKADE S, et al. Online meta-learning[C]. The 36th International Conference on Machine Learning, Long Beach, USA, 2019: 1920–1930.
