Local Destination Pooling Network for Pedestrian Trajectory Prediction of Condition Endpoint


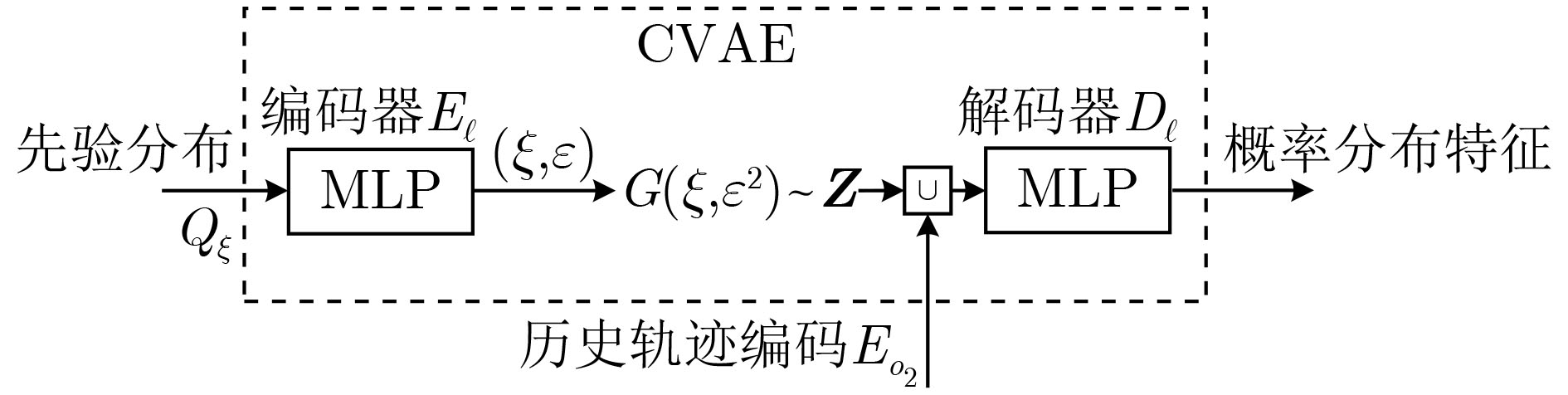

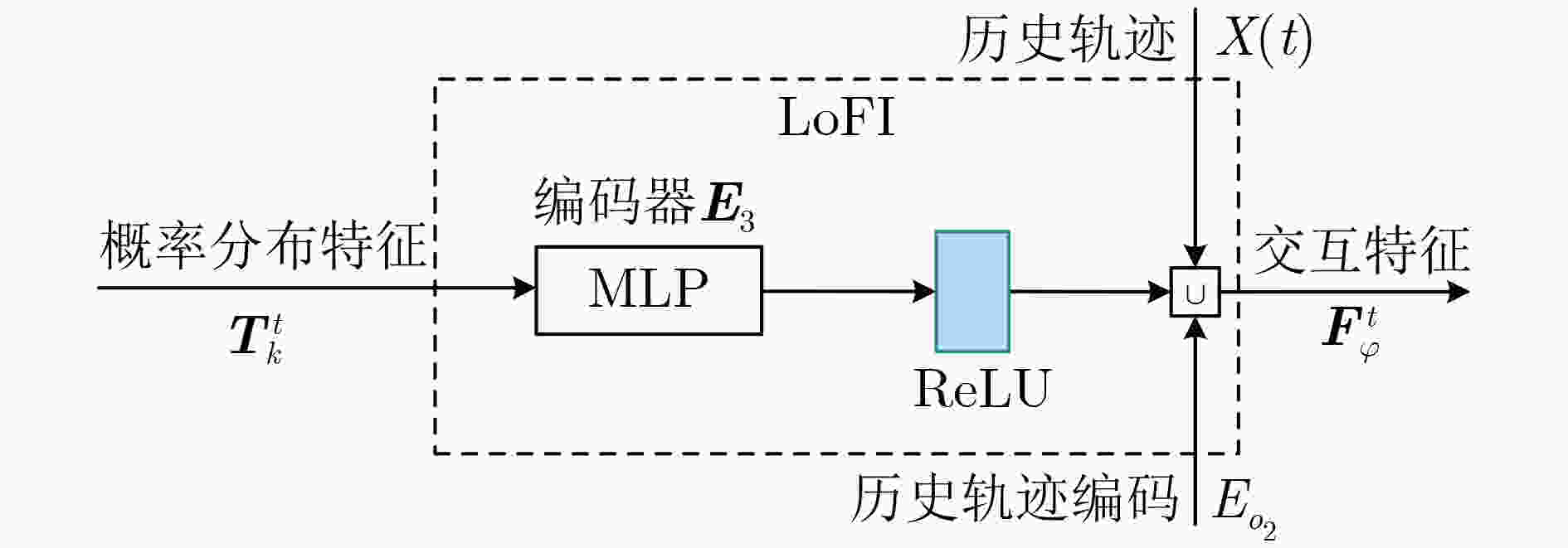

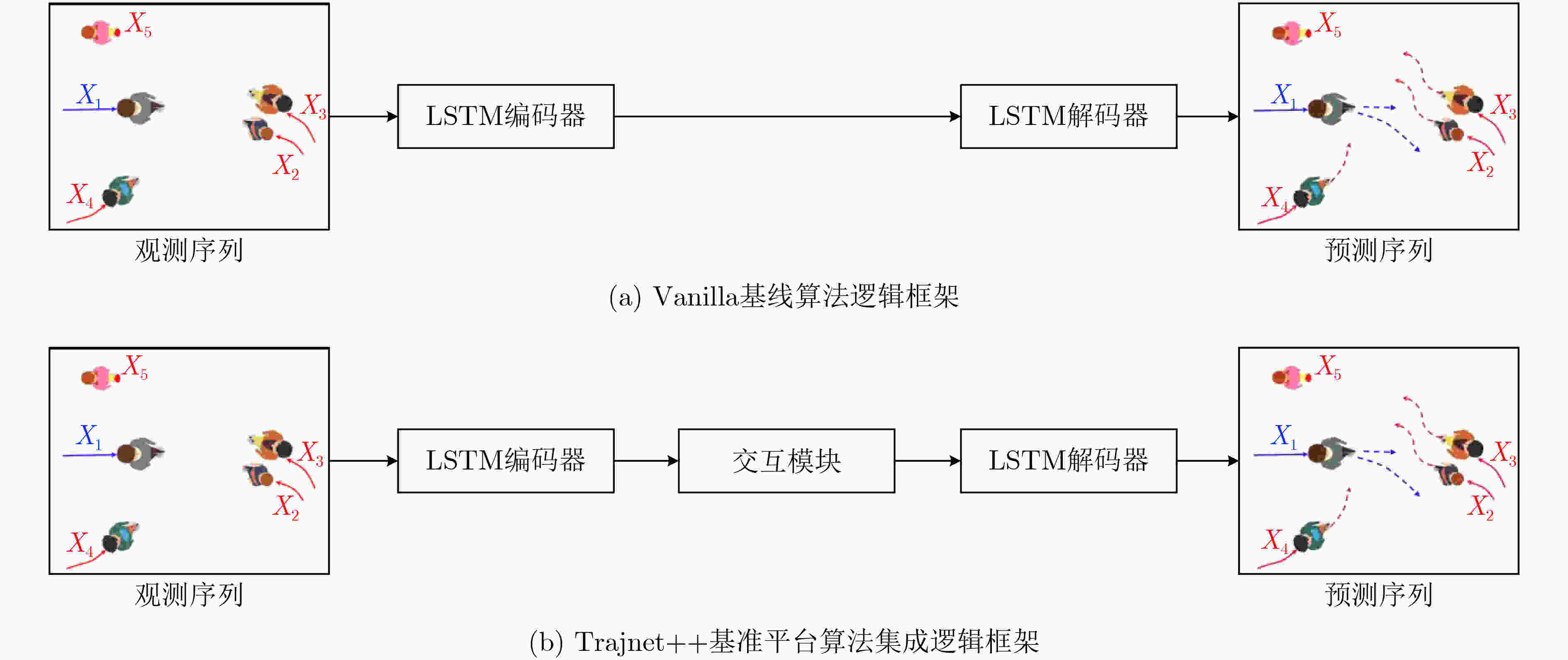

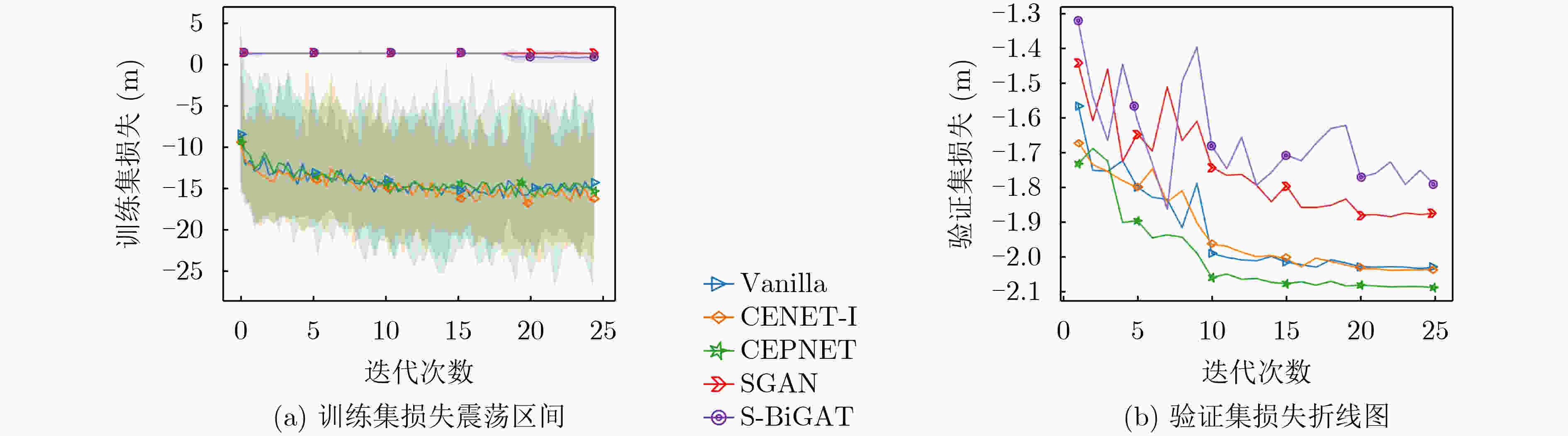

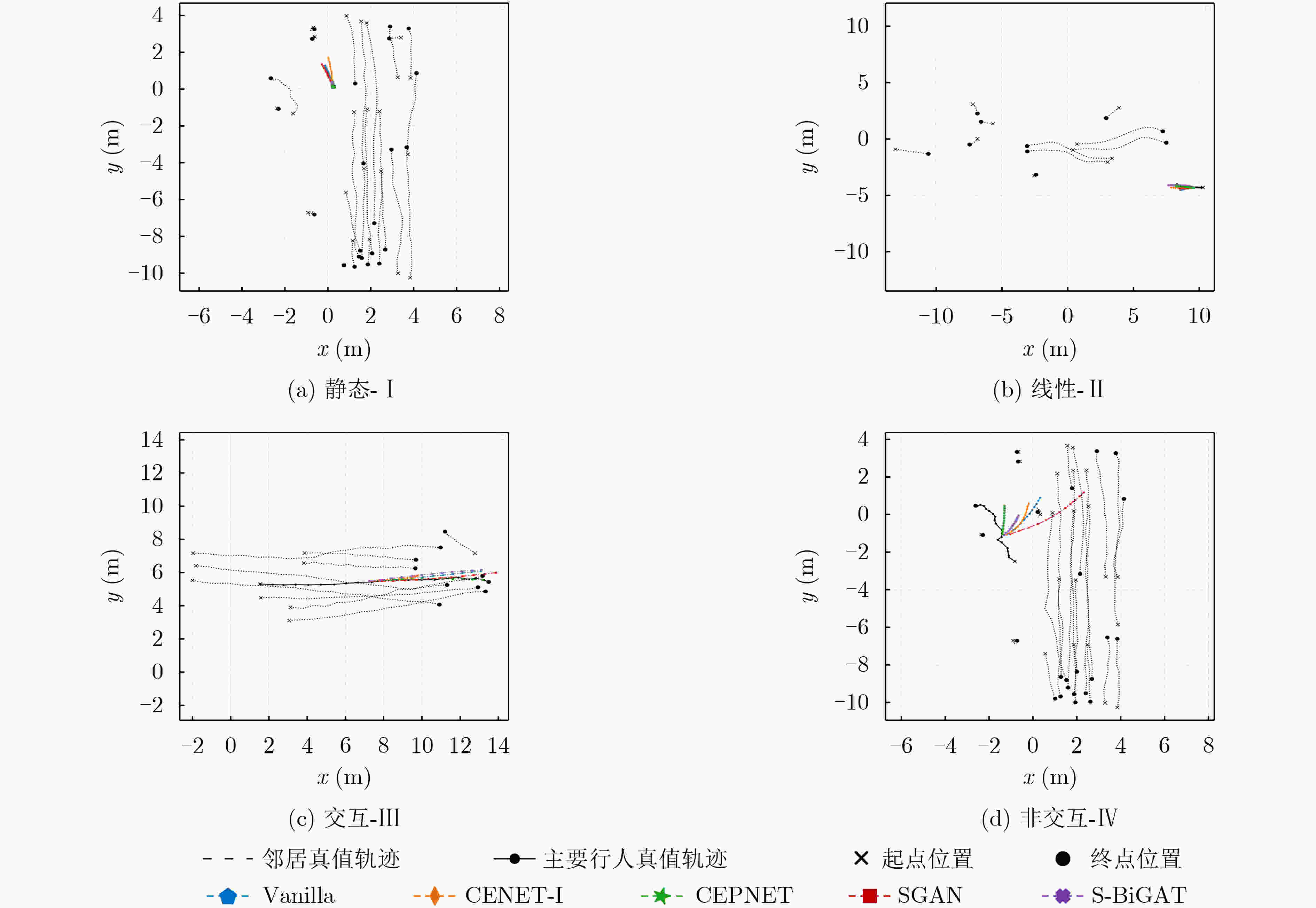

Abstract: Trajectory prediction is one of the core tasks in autonomous driving systems. Current deep-learning-based trajectory prediction algorithms involve target information representation, environment perception, and motion reasoning. Existing trajectory prediction models give insufficient consideration to pedestrians' social motivation during motion reasoning and cannot effectively anticipate the local destinations of pedestrians under different social conditions in a scene. To address this problem, a Conditional Endpoint local destination Pooling NETwork (CEPNET) is proposed. The network uses a conditional variational autoencoder to infer the latent trajectory distribution space, and thereby learns the probability distribution of historically observed trajectories in a specific scene. A conditional-endpoint local feature inference algorithm is then built, which encodes the conditional endpoints as local destination features through similarity feature encoding. A social pooling network filters out interfering signals in the scene, and a self-attention social mask is incorporated to enhance each pedestrian's self-attention. To verify the reliability of each module of the algorithm, ablation experiments on CEPNET are conducted on the public datasets Walking pedestrians In busy scenarios from a BIrd eye view (BIWI) and University of CYprus multi-person trajectory (UCY), and CEPNET is compared with advanced trajectory prediction algorithms such as the vanilla long short-term memory network (Vanilla), Socially acceptable trajectories with Generative Adversarial Networks (SGAN), and multimodal Trajectory forecasting using Bicycle-GAN and Graph Attention networks (S-BiGAT). Experimental results on the Trajnet++ benchmark show that CEPNET outperforms existing advanced algorithms. Compared with the Vanilla baseline, the Average Displacement Error (ADE) is reduced by 22.52%, the Final Displacement Error (FDE) by 20%, the predicted collision rate Col-I by 9.75%, and the ground-truth collision rate Col-II by 9.15%.
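The two displacement metrics named in the abstract, and the relative reductions quoted for them, can be illustrated with a minimal sketch. It assumes the usual definitions (ADE as the mean Euclidean error over all predicted steps, FDE as the Euclidean error at the last predicted step). The function names and the toy trajectories are hypothetical and are not taken from the paper; only the final check uses the mean FDE values reported for Vanilla and CEPNET in Table 3.

```python
import math


def displacement_errors(pred, truth):
    """Return (ADE, FDE) for one predicted trajectory.

    pred, truth: equal-length sequences of (x, y) positions in metres.
    """
    if len(pred) != len(truth) or not pred:
        raise ValueError("trajectories must be non-empty and equally long")
    dists = [math.dist(p, t) for p, t in zip(pred, truth)]
    ade = sum(dists) / len(dists)  # mean error over all predicted steps
    fde = dists[-1]                # error at the final predicted step
    return ade, fde


def relative_reduction(baseline, value):
    """Percentage by which `value` is lower than `baseline`."""
    return 100.0 * (baseline - value) / baseline


# Toy trajectories (hypothetical, for illustration only).
truth = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]
baseline_pred = [(0.0, 0.0), (1.0, 1.0), (2.0, 2.0)]
model_pred = [(0.0, 0.0), (1.0, 0.5), (2.0, 1.0)]

base_ade, base_fde = displacement_errors(baseline_pred, truth)
model_ade, model_fde = displacement_errors(model_pred, truth)

print(base_ade, base_fde)    # 1.0 2.0
print(model_ade, model_fde)  # 0.5 1.0
print(relative_reduction(base_ade, model_ade))  # 50.0

# Mean FDE from Table 3: Vanilla 1.40, CEPNET 1.12.
print(round(relative_reduction(1.40, 1.12), 2))  # 20.0
```

The last line reproduces the 20% FDE reduction stated in the abstract from the rounded means in Table 3.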


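Table 1. Learning rate configuration over training iterations

| Iteration | 1-9 | 10-18 | 19-25 |
| --- | --- | --- | --- |
| Learning rate | 10⁻³ | 10⁻⁴ | 10⁻⁵ |

Table 2. Average running time per training iteration for each model (min)

| | SGAN | S-BiGAT | Vanilla | CENET-I (ours) | CEPNET (ours) |
| --- | --- | --- | --- | --- | --- |
| Average running time | 38.38 | 151.68 | 2.97 | 66.18 | 59.09 |

Table 3. Quantitative results of CEPNET and other algorithms on the ETH and UCY datasets

| Dataset | SGAN ADE/FDE | SGAN Col-I | SGAN Col-II | S-BiGAT ADE/FDE | S-BiGAT Col-I | S-BiGAT Col-II | Vanilla (Baseline) ADE/FDE | Vanilla (Baseline) Col-I | Vanilla (Baseline) Col-II | CENET-I (ours) ADE/FDE | CENET-I (ours) Col-I | CENET-I (ours) Col-II | CEPNET (ours) ADE/FDE | CEPNET (ours) Col-I | CEPNET (ours) Col-II |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| ETH | 0.66/1.30 | 7.40 | 8.98 | 0.96/1.79 | 7.31 | 11.62 | 0.99/1.89 | 12.06 | 12.59 | 1.22/2.47 | 9.77 | 10.92 | 0.66/1.34 | 9.42 | 8.45 |
| Hotel | 0.44/0.84 | 5.66 | 5.66 | 0.84/1.52 | 3.77 | 5.66 | 0.85/1.60 | 7.55 | 1.89 | 0.85/1.61 | 3.77 | 3.77 | 0.51/1.02 | 5.66 | 3.77 |
| Univ | 0.69/1.50 | 5.33 | 5.33 | 0.61/1.36 | 2.46 | 4.51 | 0.63/1.44 | 2.05 | 2.87 | 0.69/1.64 | 2.46 | 2.87 | 0.60/1.39 | 2.46 | 2.05 |
| Zara1 | 0.43/0.90 | 4.20 | 8.39 | 0.46/0.98 | 0.7 | 11.89 | 0.42/0.98 | 8.39 | 8.39 | 0.41/0.88 | 7.69 | 9.79 | 0.39/0.84 | 6.99 | 7.69 |
| Zara2 | 0.53/1.16 | 14.25 | 14.25 | 0.50/1.12 | 8.39 | 16.68 | 0.48/1.10 | 14.56 | 15.25 | 0.47/1.05 | 15.41 | 15.2 | 0.45/1.02 | 14.67 | 15.30 |
| Mean | 0.55/1.14 | 7.37 | 8.52 | 0.67/1.36 | 4.53 | 10.07 | 0.68/1.40 | 8.92 | 8.20 | 0.72/1.49 | 7.82 | 9.11 | 0.52/1.12 | 8.05 | 7.45 |

Note: Bold entries in the Datasets column are the names of test sets that did not take part in training; red marks the lowest error and blue the second-lowest error.

Table 4. Evaluation of predictions for the four interaction categories in different scenes

| Type | Model | Scene number | ADE (m) | FDE (m) | Col-I (%) | Col-II (%) |
| --- | --- | --- | --- | --- | --- | --- |
| I | SGAN | 102 | 0.20 | 0.41 | 11.76 | 6.68 |
| I | S-BiGAT | 102 | 0.22 | 0.47 | 8.82 | 10.78 |
| I | Vanilla (Baseline) | 102 | 0.21 | 0.46 | 16.67 | 9.80 |
| I | CENET-I (ours) | 102 | 0.22 | 0.50 | 12.75 | 12.75 |
| I | CEPNET (ours) | 102 | 0.13 (↓38.1%) | 0.30 (↓34.8%) | 6.86 (↓59.9%) | 6.68 (↓31.8%) |
| II | SGAN | 779 | 0.40 | 0.80 | 11.81 | 11.42 |
| II | S-BiGAT | 779 | 0.46 | 0.91 | 7.75 | 11.68 |
| II | Vanilla (Baseline) | 779 | 0.46 | 0.91 | 11.8 | 13.22 |
| II | CENET-I (ours) | 779 | 0.53 | 1.11 | 12.07 | 10.14 |
| II | CEPNET (ours) | 779 | 0.32 (↓30.4%) | 0.69 (↓24.2%) | 11.17 (↓5.6%) | 9.50 (↓28.1%) |
| III | SGAN | 1734 | 0.61 | 1.28 | 14.24 | 13.67 |
| III | S-BiGAT | 1734 | 0.72 | 1.49 | 9.63 | 15.63 |
| III | Vanilla (Baseline) | 1734 | 0.74 | 1.54 | 15.92 | 16.03 |
| III | CENET-I (ours) | 1734 | 0.83 | 1.77 | 15.51 | 16.03 |
| III | CEPNET (ours) | 1734 | 0.61 (↓17.6%) | 1.31 (↓14.9%) | 15.4 (↓3.27%) | 15.5 (↓3.30%) |
| IV | SGAN | 660 | 0.71 | 1.50 | 4.85 | 5.91 |
| IV | S-BiGAT | 660 | 0.86 | 1.78 | 3.18 | 6.36 |
| IV | Vanilla (Baseline) | 660 | 0.82 | 1.74 | 5.76 | 7.27 |
| IV | CENET-I (ours) | 660 | 0.84 | 1.79 | 5.00 | 7.42 |
| IV | CEPNET (ours) | 660 | 0.66 (↓19.5%) | 1.44 (↓17.2%) | 3.48 (↓39.6%) | 6.36 (↓12.5%) |

Note: Red marks the lowest error and blue the second-lowest error.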
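The learning rate configuration in Table 1 amounts to a piecewise-constant schedule. The sketch below is one way to express it, assuming that the iteration index in Table 1 is 1-based and counts full training passes. The function name `learning_rate` is hypothetical and does not come from the paper's code.

```python
def learning_rate(iteration):
    """Piecewise-constant learning rate for a 1-based training iteration.

    Boundaries follow Table 1: 1-9 -> 1e-3, 10-18 -> 1e-4, 19-25 -> 1e-5.
    """
    if not 1 <= iteration <= 25:
        raise ValueError("Table 1 only covers iterations 1 to 25")
    if iteration <= 9:
        return 1e-3
    if iteration <= 18:
        return 1e-4
    return 1e-5


# Full schedule for the 25 iterations listed in Table 1.
schedule = [learning_rate(i) for i in range(1, 26)]
print(schedule[0], schedule[9], schedule[18])  # 0.001 0.0001 1e-05
```

Keeping the schedule as a pure function of the iteration index makes it easy to plug into whichever optimizer wrapper a training script uses, without committing to a specific framework.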

